Understanding Types of Environments in Artificial Intelligence

In our previous post, we explored Types of Agents in Artificial Intelligence and how they interact with their surroundings. How well an agent functions depends on the nature of the environment it operates in: the type of environment shapes the agent’s design, its complexity, and the level of intelligence it needs.

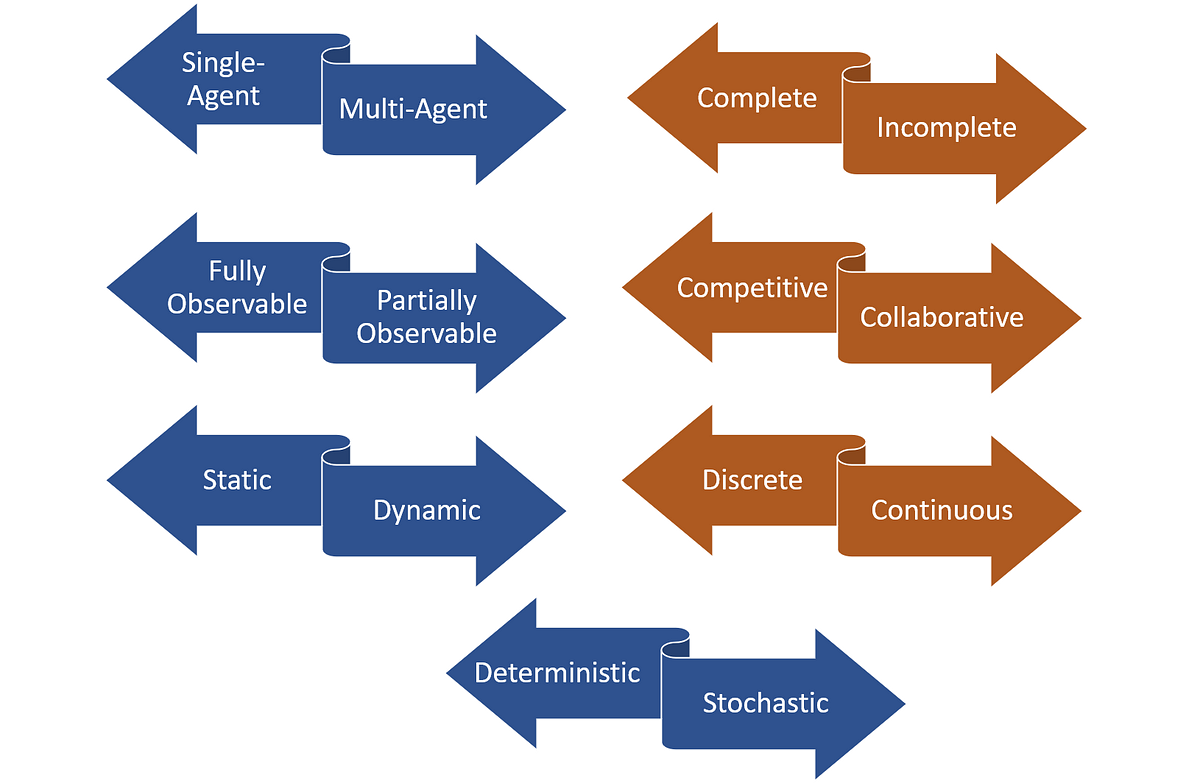

In this post, we’ll explore six key environment classifications:

- Fully Observable vs. Partially Observable

- Single Agent vs. Multi-Agent

- Deterministic vs. Stochastic

- Episodic vs. Sequential

- Static vs. Dynamic

- Discrete vs. Continuous

Let’s dive in!

1. Fully Observable vs. Partially Observable Environments

✅ Fully Observable Environment

In a fully observable environment, the agent’s sensors can access the complete state of the environment at any time. This means there is no hidden or missing information relevant to decision-making.

📌 Example:

- A game of chess where both players can see the full board.

🔑 Key Points:

- Simplifies agent design since it doesn’t need to guess or infer hidden data.

- Planning becomes more accurate and reliable.

❌ Partially Observable Environment

In a partially observable environment, the agent receives incomplete or noisy information. It doesn’t have full visibility into the environment’s current state.

📌 Example:

- A self-driving car that cannot see around a sharp corner or in heavy fog.

🔑 Key Points:

- Requires internal models or memory to maintain state estimates.

- Leads to uncertainty in decision-making.
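To make the contrast concrete, here is a minimal Python sketch on a hypothetical 3x4 grid. A fully observable percept is a copy of the whole grid. A partially observable percept shows only the agent’s cell and its four neighbours, so the agent has to build an internal map from memory. The names `WORLD`, `observe_full`, `observe_partial`, and `MemoryAgent` are illustrative assumptions and do not come from any library.

```python
from typing import Dict, List, Tuple

Grid = List[List[str]]
Pos = Tuple[int, int]

# Hypothetical 3x4 world: "." is free space, "#" is a wall.
WORLD: Grid = [
    [".", ".", "#", "."],
    [".", "#", ".", "."],
    [".", ".", ".", "#"],
]

def observe_full(world: Grid) -> Grid:
    """Fully observable: the percept is the complete state."""
    return [row[:] for row in world]

def observe_partial(world: Grid, pos: Pos) -> Dict[Pos, str]:
    """Partially observable: only the agent's cell and its 4 neighbours are visible."""
    r, c = pos
    visible: Dict[Pos, str] = {}
    for dr, dc in [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]:
        rr, cc = r + dr, c + dc
        if 0 <= rr < len(world) and 0 <= cc < len(world[0]):
            visible[(rr, cc)] = world[rr][cc]
    return visible

class MemoryAgent:
    """Builds an internal map by merging partial percepts over time."""

    def __init__(self) -> None:
        self.known: Dict[Pos, str] = {}

    def update(self, percept: Dict[Pos, str]) -> None:
        self.known.update(percept)

agent = MemoryAgent()
for pos in [(0, 0), (1, 0), (2, 0)]:  # walk down the left column
    agent.update(observe_partial(WORLD, pos))

total = len(WORLD) * len(WORLD[0])
print(f"{len(agent.known)} of {total} cells known")  # 6 of 12 cells known
```

After three moves the agent knows only half of the world. Everything else has to be remembered, inferred, or explored, and that is exactly the extra work partial observability imposes.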

2. Single Agent vs. Multi-Agent Environments

👤 Single Agent Environment

In a single-agent environment, only one agent acts and makes decisions. There are no other agents whose behavior it has to take into account.

📌 Example:

- A robot vacuum cleaning a room without any other agents present.

🔑 Key Points:

- No competition or cooperation involved.

- Easier to model and control.

👥 Multi-Agent Environment

Here, multiple agents share the environment, and they may cooperate, compete, or simply coexist.

📌 Examples:

- Multiple autonomous drones navigating the same airspace.

- Online multiplayer games with multiple human or AI players.

🔑 Key Points:

- Agents must consider others’ actions and strategies.

- Can be cooperative (e.g., rescue robots) or competitive (e.g., auction systems).
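In a single-agent setting, the outcome depends only on the agent’s own action. In a multi-agent setting, it depends on the joint action. The sketch below uses a hypothetical competitive game (matching pennies) to show this: the row agent’s best action changes with what the other agent does. `PAYOFF` and `best_response` are illustrative names, not part of any framework.

```python
from typing import Dict, Tuple

# Hypothetical two-agent competitive game (matching pennies):
# the row agent scores +1 if both choices match, -1 otherwise.
PAYOFF: Dict[Tuple[str, str], int] = {
    ("heads", "heads"): 1,
    ("heads", "tails"): -1,
    ("tails", "heads"): -1,
    ("tails", "tails"): 1,
}
ACTIONS = ["heads", "tails"]

def best_response(opponent_action: str) -> str:
    """Row agent's best action, given the other agent's action."""
    return max(ACTIONS, key=lambda a: PAYOFF[(a, opponent_action)])

print(best_response("heads"))  # heads
print(best_response("tails"))  # tails
```

No single action is always best here. The agent has to reason about the other agent’s strategy, which is what "consider others’ actions and strategies" means in practice.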

3. Deterministic vs. Stochastic Environments

🧩 Deterministic Environment

In a deterministic environment, the outcome of every action is predictable. There’s no randomness involved; the next state is determined solely by the current state and the agent’s action.

📌 Example:

- Solving a maze with defined walls and clear paths.

🔑 Key Points:

- Easier for agents to plan actions.

- No need for probabilistic reasoning.
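Because a deterministic environment maps each (state, action) pair to exactly one next state, an agent can compute a complete plan up front and execute it blindly. The Python sketch below assumes a small hypothetical maze (`MAZE`) and uses breadth-first search as the planner. BFS is one common choice for this, not the only one.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Pos = Tuple[int, int]

# Hypothetical maze: "S" start, "G" goal, "#" wall.
MAZE = [
    "S.#",
    ".##",
    "..G",
]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(pos: Pos, action: str) -> Pos:
    """Deterministic transition: same state + same action -> same next state."""
    dr, dc = MOVES[action]
    r, c = pos[0] + dr, pos[1] + dc
    if 0 <= r < len(MAZE) and 0 <= c < len(MAZE[0]) and MAZE[r][c] != "#":
        return (r, c)
    return pos  # bumping into a wall or the edge leaves the agent in place

def plan(start: Pos, goal: Pos) -> Optional[List[str]]:
    """Breadth-first search over the deterministic transition function."""
    frontier = deque([start])
    parent: Dict[Pos, Optional[Tuple[Pos, str]]] = {start: None}
    while frontier:
        pos = frontier.popleft()
        if pos == goal:
            actions: List[str] = []
            while parent[pos] is not None:
                prev, act = parent[pos]
                actions.append(act)
                pos = prev
            return actions[::-1]
        for action in MOVES:
            nxt = step(pos, action)
            if nxt not in parent:
                parent[nxt] = (pos, action)
                frontier.append(nxt)
    return None

print(plan((0, 0), (2, 2)))  # ['down', 'down', 'right', 'right']
```

Since `step` never surprises the agent, replaying the plan always lands on the goal. No probabilities are involved anywhere.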

🎲 Stochastic Environment

A stochastic environment involves randomness or uncertainty: the same action taken in the same state can lead to different outcomes, each with some probability.

📌 Example:

- Weather forecasting: the weather cannot be predicted exactly, so even forecasts built from the same data are expressed as probabilities.

🔑 Key Points:

- Agents need to model uncertainty and use probabilistic reasoning.

- Often requires learning from experience or simulations.
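A common way to model a stochastic environment is a transition that succeeds only with some probability. The Python sketch below assumes a hypothetical "slippery" grid. The intended move succeeds 80% of the time; otherwise the agent slips sideways. The agent then estimates the outcome distribution by simulation. The 0.8 success rate and the names `stochastic_step` and `estimate_outcomes` are illustrative assumptions.

```python
import random
from collections import Counter
from typing import Dict, Tuple

Pos = Tuple[int, int]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
# Hypothetical slip model: a failed move goes to one of the two perpendicular directions.
PERPENDICULAR = {"up": ["left", "right"], "down": ["left", "right"],
                 "left": ["up", "down"], "right": ["up", "down"]}
SUCCESS_PROB = 0.8  # assumed value, for illustration only

def stochastic_step(pos: Pos, action: str, rng: random.Random) -> Pos:
    """Same state + same action can produce different next states."""
    actual = action if rng.random() < SUCCESS_PROB else rng.choice(PERPENDICULAR[action])
    dr, dc = MOVES[actual]
    return (pos[0] + dr, pos[1] + dc)

def estimate_outcomes(pos: Pos, action: str, trials: int = 10_000,
                      seed: int = 0) -> Dict[Pos, float]:
    """Estimate the outcome distribution of one action by repeated simulation."""
    rng = random.Random(seed)
    counts = Counter(stochastic_step(pos, action, rng) for _ in range(trials))
    return {state: n / trials for state, n in counts.items()}

print(estimate_outcomes((0, 0), "right"))
```

The estimates come out close to 0.8 for `(0, 1)` and about 0.1 for each slip direction. An agent in this environment plans with these probabilities instead of assuming a single guaranteed outcome.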

4. Episodic vs. Sequential Environments

🎯 Episodic Environment

In an episodic environment, the agent’s experience is divided into independent episodes. In each episode the agent perceives and acts, and its decision in one episode does not affect later ones.

📌 Example:

- A spam filter processes one email at a time; previous emails don’t affect the decision.

🔑 Key Points:

- Simple and fast decision-making.

- No need to consider long-term consequences.
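In an episodic setting, the decision function can be stateless: its output depends only on the current input. The Python sketch below uses a toy keyword rule as a stand-in for a spam filter. `SPAM_WORDS` and the two-keyword threshold are hypothetical; a real filter would use a trained model.

```python
from typing import List

# Hypothetical keyword list; real spam filters typically use a trained classifier.
SPAM_WORDS = {"winner", "free", "prize"}

def classify(email: str) -> str:
    """Episodic decision: depends only on the current email, with no memory of earlier ones."""
    words = set(email.lower().split())
    return "spam" if len(words & SPAM_WORDS) >= 2 else "ham"

inbox: List[str] = [
    "You are a WINNER claim your FREE prize",
    "Meeting moved to 3pm",
    "Free lunch in the kitchen",
]
print([classify(e) for e in inbox])  # ['spam', 'ham', 'ham']
```

Processing the inbox in any order gives each email the same label, because no decision carries over into the next episode.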

🔗 Sequential Environment

In a sequential environment, the current decision influences future decisions and states.

📌 Example:

- Playing a video game or navigating a robot through a city.

🔑 Key Points:

- Requires memory and planning.

- Long-term strategy is important.
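In a sequential environment, an action that looks best right now can be a poor choice overall. The Python sketch below assumes a hypothetical two-step problem: "grab" pays more immediately, but "invest" leads to a state with a much larger later reward. A greedy agent is compared with one that looks ahead recursively. `TRANSITIONS`, `greedy_return`, and `best_return` are illustrative names.

```python
from typing import Dict, Tuple

# Hypothetical environment: TRANSITIONS[state][action] = (reward, next_state)
TRANSITIONS: Dict[str, Dict[str, Tuple[int, str]]] = {
    "start":    {"grab": (5, "depleted"), "invest": (1, "grown")},
    "depleted": {"harvest": (0, "end")},
    "grown":    {"harvest": (10, "end")},
    "end":      {},
}

def greedy_return(state: str) -> int:
    """Always take the action with the largest immediate reward."""
    total = 0
    while TRANSITIONS[state]:
        options = TRANSITIONS[state]
        action = max(options, key=lambda a: options[a][0])
        reward, state = options[action]
        total += reward
    return total

def best_return(state: str) -> int:
    """Look ahead: immediate reward plus the best achievable return afterwards."""
    options = TRANSITIONS[state]
    if not options:
        return 0
    return max(reward + best_return(nxt) for reward, nxt in options.values())

print(greedy_return("start"))  # 5
print(best_return("start"))    # 11
```

The first decision determines which rewards are available later. That is why sequential environments call for memory and planning rather than one-shot choices.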

5. Static vs. Dynamic Environments

🛑 Static Environment

The environment does not change while the agent is deliberating; only the agent’s own actions change it.

📌 Example:

- A crossword puzzle on paper remains static until the player acts.

🔑 Key Points:

- No time pressure: the agent can take as long as it needs to decide.

- Ideal for offline planning.

⚡ Dynamic Environment

In a dynamic environment, changes occur while the agent is thinking or acting.

📌 Examples:

- Traffic situations for autonomous cars.

- Stock market trading.

🔑 Key Points:

- Requires real-time perception and reaction.

- Often combined with partial observability.
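A quick way to see why dynamic environments demand real-time reaction is to measure how stale an observation becomes while the agent thinks. The Python sketch below assumes a hypothetical target moving along a line at constant speed. The agent observes it at time 0, deliberates, and then acts on that old observation. The functions `target_position` and `miss_distance` are illustrative.

```python
def target_position(t: float, speed: float, start: float = 0.0) -> float:
    """Position at time t of a target moving at constant speed along a line."""
    return start + speed * t

def miss_distance(speed: float, deliberation_time: float) -> float:
    """Gap between where the agent saw the target and where it is when the agent acts."""
    observed = target_position(0.0, speed)
    actual = target_position(deliberation_time, speed)
    return abs(actual - observed)

print(miss_distance(speed=0.0, deliberation_time=5.0))  # 0.0 (static: thinking time is free)
print(miss_distance(speed=2.0, deliberation_time=3.0))  # 6.0 (dynamic: the world moved on)
```

In the static case the agent can deliberate as long as it likes. In the dynamic case every unit of thinking time costs accuracy.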

6. Discrete vs. Continuous Environments

🔢 Discrete Environment

A discrete environment has a finite (or at least countable) set of clearly distinct states and actions.

📌 Examples:

- Board games like chess or checkers.

🔑 Key Points:

- Can be easily modeled using logical or rule-based approaches.

- Good for symbolic reasoning.
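Because a discrete environment’s states and actions are countable, they can be listed explicitly, and a rule table can cover every case. The Python sketch below assumes a hypothetical 3x3 grid world with four moves. The simple "go right, then down" policy is only an illustration of a tabular, rule-based policy.

```python
from itertools import product

# Hypothetical 3x3 grid world: every state and action can be enumerated.
STATES = [(r, c) for r in range(3) for c in range(3)]
ACTIONS = ["up", "down", "left", "right"]

state_action_pairs = list(product(STATES, ACTIONS))
print(len(STATES), len(ACTIONS), len(state_action_pairs))  # 9 4 36

# A rule-based (tabular) policy that assigns an explicit action to every state.
policy = {s: ("right" if s[1] < 2 else "down") for s in STATES}
print(policy[(0, 0)], policy[(1, 2)])  # right down
```

The same table-based approach cannot enumerate a real-valued state such as a temperature, which is the problem continuous environments present.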

📈 Continuous Environment

In a continuous environment, states and actions take values from a continuous range (typically real numbers), so they cannot be listed one by one.

📌 Examples:

- Controlling a drone’s flight in 3D space.

- Temperature control systems.

🔑 Key Points:

- Requires mathematical models, often based on calculus (e.g., differential equations).

- Often uses control theory or machine learning for optimization.
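Temperature control shows what "continuous" means in practice: the room temperature (state) and the heater power (action) are both real numbers. The Python sketch below applies a proportional controller, a basic control-theory technique, to an assumed first-order room model, dT/dt = u - loss * (T - ambient), integrated with forward-Euler steps. The model and every parameter value (`kp`, `loss`, `dt`, and so on) are hypothetical choices for illustration.

```python
def simulate(setpoint: float = 21.0, ambient: float = 15.0, kp: float = 2.0,
             loss: float = 0.1, dt: float = 0.1, steps: int = 200) -> float:
    """Proportional control of a room temperature (continuous state and action).

    Assumed room model (hypothetical): dT/dt = u - loss * (T - ambient),
    integrated with forward-Euler steps of size dt.
    """
    temp = ambient
    for _ in range(steps):
        error = setpoint - temp
        u = max(0.0, kp * error)  # heater power: a real value, cannot be negative
        temp += dt * (u - loss * (temp - ambient))
    return temp

print(round(simulate(), 2))  # 20.71
```

The temperature settles at (kp * setpoint + loss * ambient) / (kp + loss), about 20.71 °C, slightly below the 21 °C setpoint. This steady-state error is a known property of purely proportional control, and the way to analyze it is with the mathematical model rather than a lookup table.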

🧠 Why It Matters

Understanding environment types helps AI designers:

- Choose the right type of agent (reactive, learning, etc.)

- Select suitable algorithms (rule-based, probabilistic, or learning)

- Determine required resources (sensors, computational power, etc.)
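One way to put these points into practice is to record an environment’s six properties and derive design considerations from them. The Python sketch below does this using the key points listed earlier in this post. The `EnvironmentProfile` class, the `design_hints` function, and the one-rule-per-property mapping are hypothetical simplifications, not a standard method.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class EnvironmentProfile:
    fully_observable: bool
    single_agent: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool

def design_hints(env: EnvironmentProfile) -> List[str]:
    """Map each 'harder' property to the design consideration discussed above."""
    hints: List[str] = []
    if not env.fully_observable:
        hints.append("maintain internal state / memory")
    if not env.single_agent:
        hints.append("model other agents' actions and strategies")
    if not env.deterministic:
        hints.append("use probabilistic reasoning")
    if not env.episodic:
        hints.append("plan ahead for long-term consequences")
    if not env.static:
        hints.append("perceive and react in real time")
    if not env.discrete:
        hints.append("use continuous models (e.g., control theory)")
    return hints

crossword = EnvironmentProfile(fully_observable=True, single_agent=True, deterministic=True,
                               episodic=False, static=True, discrete=True)
self_driving_car = EnvironmentProfile(fully_observable=False, single_agent=False,
                                      deterministic=False, episodic=False,
                                      static=False, discrete=False)

print(design_hints(crossword))              # ['plan ahead for long-term consequences']
print(len(design_hints(self_driving_car)))  # 6
```

The crossword needs only lookahead, while the self-driving car triggers every consideration. This matches the intuition that it is one of the hardest environment types.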

📌 Conclusion

In this post, we covered six important dimensions for classifying AI environments. These distinctions help us understand how complex or simple a given environment is, and what kind of agent design and algorithms are most appropriate.

A quick recap:

- Observability: Does the agent see everything?

- Number of Agents: Is it acting alone or with others?

- Determinism: Are outcomes predictable?

- Time Dependency: Do actions affect the future?

- Dynamism: Does the environment change while the agent is deliberating?

- State Nature: Are states and actions discrete (countable) or continuous?