Types of Intelligent Agents in AI: A Technical Breakdown with Real-World Examples

In our journey through Artificial Intelligence, we’ve discussed what agents are, how they’re configured, and how frameworks like PEAS and PAGE help us model them. Now it’s time to go deeper and classify intelligent agents based on their complexity and capabilities.

In this post, we’ll explore the five major types of agents:

- Simple Reflex Agents

- Model-Based Reflex Agents

- Goal-Based Agents

- Utility-Based Agents

- Learning Agents

Each type builds on the previous, becoming more advanced and capable of handling complex environments.

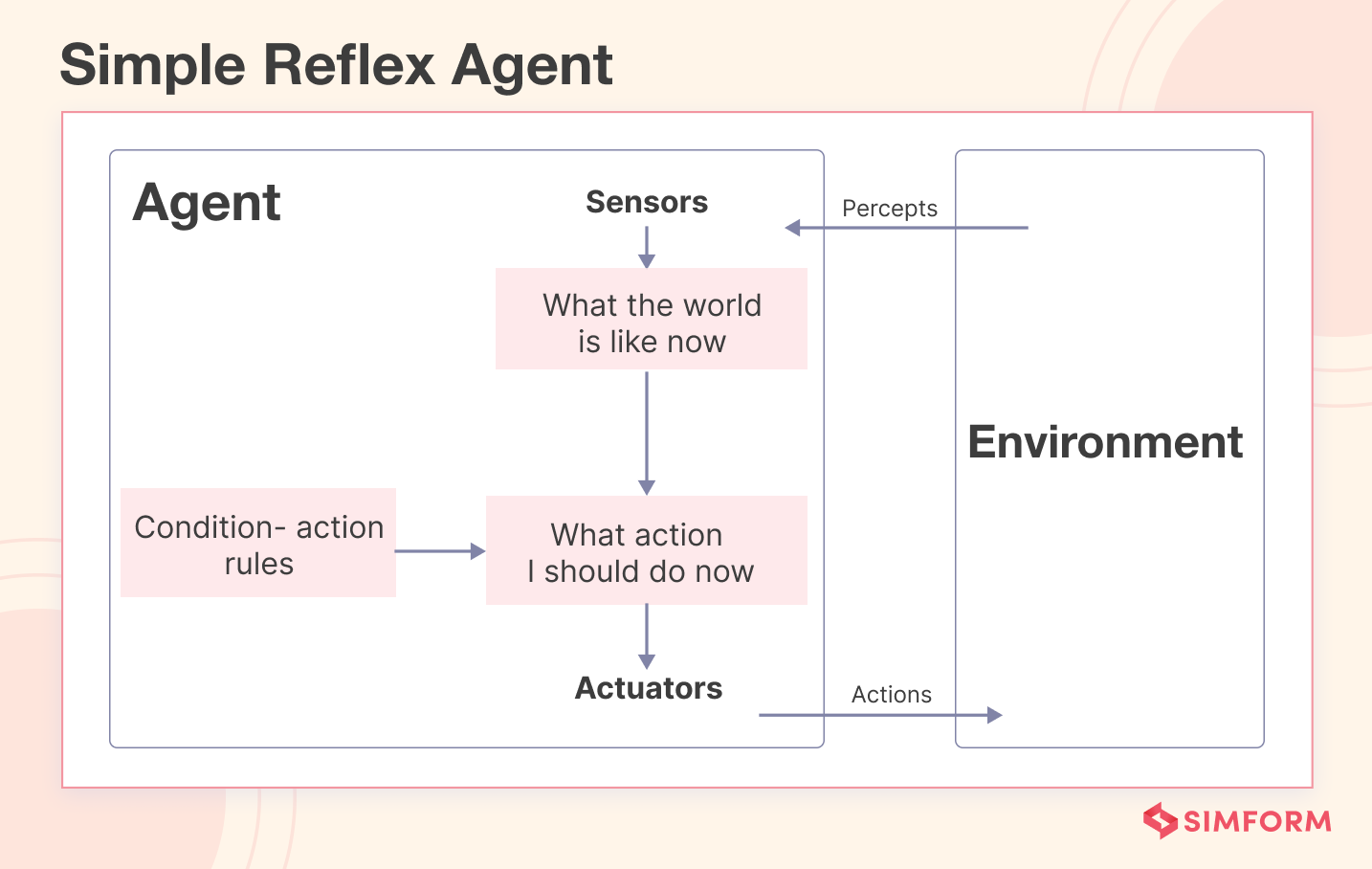

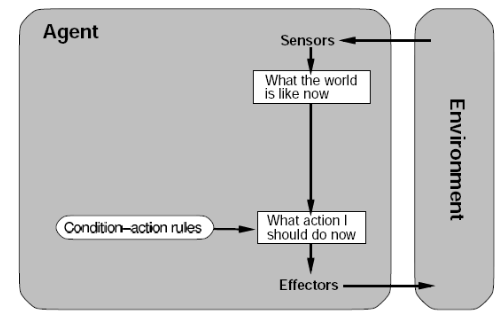

Simple Reflex Agents

Technical Explanation:

A Simple Reflex Agent selects actions based solely on the current percept (what its sensors report right now) and ignores everything it perceived earlier. It follows a set of condition-action rules:

“If condition, then action.”

- No memory of the past

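- Works reliably only if the environment is fully observable, i.e. the current percept contains everything needed to pick the right action

- Cannot deal with situations its rules do not cover, or with partial information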

Real-World Example:

Thermostat:

- Condition: If temperature > 25°C

- Action: Turn off heater

The thermostat has no memory or understanding of how the temperature changed; it simply reacts to the current reading.
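
The thermostat rule can be written as a single function from percept to action. This is only a minimal sketch: the function name, the action strings, and the "otherwise keep heating" branch are assumptions added for illustration, since the example only states the rule for temperatures above 25°C.

```python
def thermostat_agent(temperature_c: float, threshold_c: float = 25.0) -> str:
    """Simple reflex agent: maps the current percept directly to an action."""
    # Condition-action rule from the example: temperature > 25°C -> heater off.
    if temperature_c > threshold_c:
        return "heater_off"
    # Assumed complementary rule (not stated in the text): otherwise keep heating.
    return "heater_on"


# The agent keeps no state, so each reading is handled independently.
for reading in (22.0, 25.0, 27.5):
    print(reading, "->", thermostat_agent(reading))
```

Because the function has no state, the same reading always produces the same action, regardless of what happened before.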

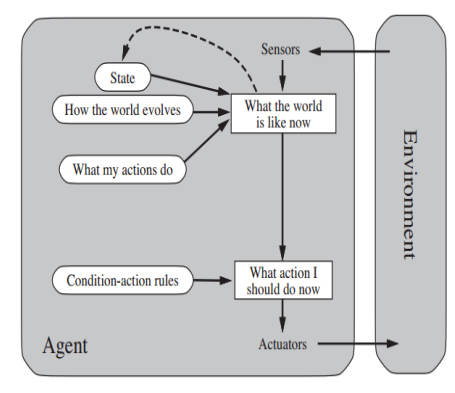

Model-Based Reflex Agents

Technical Explanation:

A Model-Based Reflex Agent improves upon the simple reflex agent by maintaining an internal state: a model of the world that keeps track of the parts of the environment it cannot currently observe. This allows it to work in partially observable environments.

It uses the current percept and its internal state (memory) to make decisions.

Real-World Example:

Robotic Vacuum Cleaner (e.g., Roomba):

- Keeps track of which areas have been cleaned.

- Uses sensors to detect dirt and obstacles.

- Uses internal memory to avoid repeating actions unnecessarily.
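
The difference from a simple reflex agent is the stored state. The sketch below is a hypothetical grid-world vacuum, not how a Roomba is actually implemented: the class name, the grid coordinates, and the `free_neighbours` percept are all assumptions made for illustration.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]


class ModelBasedVacuum:
    """Reflex agent with internal state: remembers which cells are already handled."""

    def __init__(self) -> None:
        self.cleaned: Set[Cell] = set()  # internal model: cells known to be clean

    def act(self, position: Cell, dirt_detected: bool,
            free_neighbours: List[Cell]) -> Tuple[str, Cell]:
        # Rule 1: the current percept reports dirt, so clean and update the model.
        if dirt_detected:
            self.cleaned.add(position)
            return ("clean", position)
        # No dirt here: remember that this cell needs no further work.
        self.cleaned.add(position)
        # Rule 2: use memory to prefer a neighbour that has not been handled yet.
        for cell in free_neighbours:
            if cell not in self.cleaned:
                return ("move", cell)
        # Rule 3: everything nearby is done, so stop (a real robot might dock instead).
        return ("stop", position)


vacuum = ModelBasedVacuum()
print(vacuum.act((0, 0), True, [(0, 1)]))            # dirt sensed at the start cell
print(vacuum.act((0, 0), False, [(0, 1)]))           # cell is clean now, move on
print(vacuum.act((0, 1), False, [(0, 0), (0, 2)]))   # skips (0, 0), already cleaned
```

The third call shows the effect of memory: both neighbours look the same to the sensors, but the agent avoids the one it has already cleaned.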

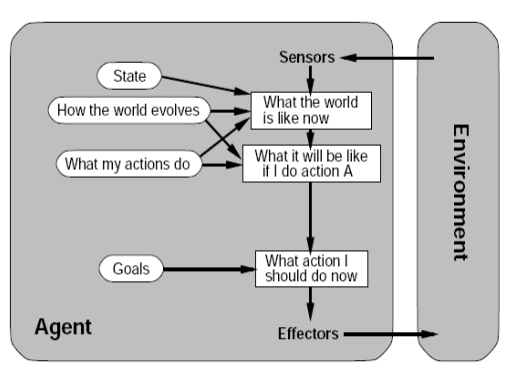

Goal-Based Agents

Technical Explanation:

Goal-Based Agents go beyond reacting to the environment. They choose actions that will help them achieve a specific goal.

- Use search and planning algorithms to explore different possibilities

- Can make decisions based on future consequences

- More flexible than reflex agents

Real-World Example:

Self-Driving Car (Goal: Reach Destination Safely)

- Perceives road, traffic, and obstacles

- Plans route and makes driving decisions to reach destination

- Adjusts actions dynamically to avoid accidents or traffic
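
To make "search and planning" concrete, here is a toy route planner that uses breadth-first search over a road graph. It is a sketch under heavy assumptions: the graph, the place names, and the `plan_route` function are invented for illustration, and a real self-driving stack is far more involved.

```python
from collections import deque


def plan_route(roads, start, goal):
    """Breadth-first search: returns a path with the fewest hops, or None."""
    frontier = deque([[start]])   # paths waiting to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        node = path[-1]
        if node == goal:          # goal test: the planner stops once the goal is reached
            return path
        for nxt in roads.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None                   # no route reaches the goal


# Hypothetical road map: each key lists the places directly reachable from it.
roads = {
    "home": ["A", "B"],
    "A": ["C"],
    "B": ["C", "office"],
    "C": ["office"],
    "office": [],
}
print(plan_route(roads, "home", "office"))

# If the road from B to the office is blocked, the agent replans toward the same goal.
blocked = dict(roads, B=["C"])
print(plan_route(blocked, "home", "office"))
```

The goal stays fixed while the plan changes, which is what separates this agent from a reflex agent with hard-coded rules.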

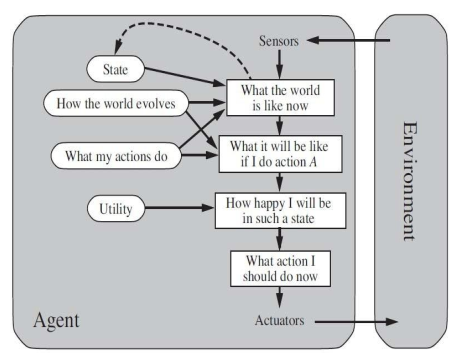

Utility-Based Agents

Technical Explanation:

Utility-Based Agents go a step beyond goals: when several actions or outcomes are possible, they score each one with a utility function and choose the action with the highest (expected) utility.

- Uses a quantitative measure of success (e.g., score, cost, time)

- Capable of weighing trade-offs between options

- More sophisticated decision-making than goal-based agents

Real-World Example:

Stock Trading Bot

- Goal: Maximize returns

- Considers multiple investments

- Uses utility function to evaluate risk vs. reward and chooses the best one
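
One simple way to express a risk vs. reward trade-off is a utility of the form `expected_return - risk_aversion * risk`. The sketch below assumes that formula and uses made-up numbers; the `Investment` class, the option names, and the `risk_aversion` parameter are illustrative, not taken from any real trading system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Investment:
    name: str
    expected_return: float  # e.g. 0.07 means 7%
    risk: float             # e.g. volatility of returns


def utility(inv: Investment, risk_aversion: float = 0.5) -> float:
    # Assumed utility: reward minus a penalty proportional to risk.
    return inv.expected_return - risk_aversion * inv.risk


def choose(options, risk_aversion: float = 0.5) -> Investment:
    # Pick the option with the highest utility for this risk preference.
    return max(options, key=lambda inv: utility(inv, risk_aversion))


# Made-up figures for illustration only.
options = [
    Investment("low_risk", expected_return=0.03, risk=0.01),
    Investment("balanced", expected_return=0.07, risk=0.06),
    Investment("high_risk", expected_return=0.20, risk=0.50),
]

for aversion in (0.1, 0.5, 2.0):
    print(aversion, "->", choose(options, aversion).name)
```

Changing `risk_aversion` changes the chosen investment without changing the goal of maximizing returns, which is the kind of trade-off a plain goal test cannot express.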

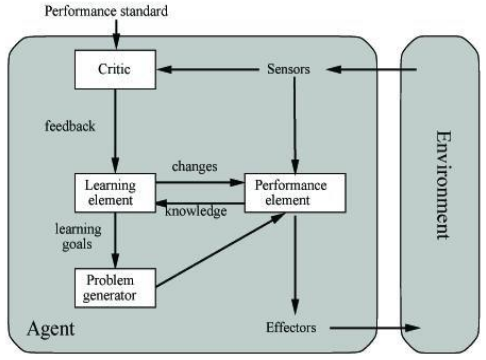

Learning Agents

Technical Explanation:

Learning Agents can learn from experience and improve performance over time. They can modify their behavior based on feedback, which makes them adaptive.

- Adds a learning module on top of a decision-making agent, which can be any of the previous types

- Uses techniques like reinforcement learning or supervised learning

- Can update internal models, rules, or utility functions

Real-World Example:

AI in Video Games (e.g., OpenAI Five, OpenAI’s Dota 2 bot)

- Learns from gameplay

- Adapts strategies based on opponent behavior

- Continuously improves over thousands of iterations
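
The feedback loop can be shown with a very small reinforcement-learning-style sketch: an epsilon-greedy agent that estimates the value of each action from the rewards it receives. Everything here is an assumption for illustration (the class, the two strategy names, and the fixed rewards in `play_round`); it is not how OpenAI's bot was trained.

```python
import random


class LearningAgent:
    """Epsilon-greedy learner: improves its action-value estimates from feedback."""

    def __init__(self, actions, epsilon=0.2, seed=0):
        self.values = {a: 0.0 for a in actions}  # learned estimate per action
        self.counts = {a: 0 for a in actions}    # how often each action was tried
        self.epsilon = epsilon
        self.rng = random.Random(seed)           # seeded for repeatable runs

    def best_action(self):
        return max(self.values, key=self.values.get)

    def choose(self):
        # Explore occasionally, otherwise exploit the best-known action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return self.best_action()

    def learn(self, action, reward):
        # Incremental average: move the estimate toward the observed reward.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def play_round(action):
    # Hypothetical environment: "defensive" play is rewarded more than "aggressive".
    return 1.0 if action == "defensive" else 0.2


agent = LearningAgent(["aggressive", "defensive"])
for _ in range(500):
    action = agent.choose()
    agent.learn(action, play_round(action))

print(agent.best_action(), agent.values)
```

The agent starts with no preference and ends up favoring the better-rewarded strategy purely from feedback, without anyone editing its rules.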

| Agent Type | Memory | Goal-Oriented | Learns? | Example |
|---|---|---|---|---|
| Simple Reflex | ❌ | ❌ | ❌ | Thermostat |
| Model-Based | ✅ | ❌ | ❌ | Robotic Vacuum |
| Goal-Based | ✅ | ✅ | ❌ | Self-Driving Car |
| Utility-Based | ✅ | ✅ | ❌ | Stock Trading Bot |
| Learning | ✅ | ✅ | ✅ | Game AI Bot |

Understanding the types of intelligent agents helps us appreciate how artificial systems grow in complexity, from basic reflex-based actions to advanced self-learning behaviors. Each type has its own applications, strengths, and limitations, and suits different kinds of tasks.